[ Tuesday, April 23, 2024 ]

United Healthcare: It's been a bad spring for UHC: their pharmacy order and clearinghouse subsidiary Change Healthcare suffered one of the most impactful cybersecurity events in healthcare, resulting in delayed prescription deliveries and payment processing for providers and plans. We are now learning that hackers from the AlphV hacker group (also referred to as BlackCat) apparently accessed Change's systems February 12 and began stealing data. On February 21, AlphV detonated a ransomware bomb that encrypted and froze the bulk of Change's system, basically shutting down Change's claims processing and clearinghouse function, along with its Optum affiliate that processes pharmacy orders. UHC has now announced that the data was stolen and is now being disclosed by the hackers.

Wired magazine reported that Change paid $22,000,000 in ransom to get the hackers to return or destroy the data. Now, UHC is announcing that the hackers are disclosing the data anyway. Who would've thought hackers wouldn't honor their promises?

Jeff [8:20 AM]

[ Wednesday, April 17, 2024 ]

Tracking Technologies: In the latest news on the use of website tracking technologies such as Google Pixel, Monument Health has entered into a settlement agreement with the FTC to not use the technology in a way that could leak its patients' PHI to advertisers.

Technically, the pixels allow technology companies to track behavior of website visitors, such as by tracking where they go on a website. It helps the website owner know what services people are interested in, what web page language seems to attract visitors, and other information that can help the website owner improve its business.

A user's behavior on a website is not always PHI, but it could be: someone could look at a website for a particular disease because they are curious about it, are researching it, or have a friend or family member who has the disease; however, it's also fairly likely that when someone clicks on a link that says, "if you have health condition X and are interested in treatment options, click here," clicking on the link is at least closely correlated to the person having the condition, which is PHI.

The company offering Pixels and other tracking technology helps the website owner improve its own website and business; however, the technology company also might use the information to direct advertisers (including its own advertising options). If someone using a particular computer, phone, or other internet-accessing device visits a particular website that is associated with a particular subject matter, type of product, or activity, then the user of that computer is much more likely to be someone interested in related products and services; knowing who those people are is valuable to advertisers.

Let's assume a particular smartphone web browser regularly searches for images and information on deer hunting. If a business sells deer hunting supplies and puts together game hunts, that business would really want to advertise to whoever is using that smartphone. On the other hand, a business involved in animal rights and veganism would not want to waste its marketing dollars contacting that smartphone user.

The effect on the customer can be creepy: it looks like the website is spying on me. And when the subject matter is healthcare, it becomes a question: did the company hosting the tracking technology disclose PHI from the user who was searching the healthcare matter?

Not necessarily; the fact that person X looks up healthcare service Y does not mean that person X has condition Y. HOWEVER, there is definitely a correlation, and in some cases a direct connection.

More will come from this.

Anyway, that's the reason these tracking technologies are such a hot-button issue.

Jeff [7:43 AM]

[ Sunday, March 31, 2024 ]

Another "Right of Access" Settlement: OCR has entered into its 47th settlement with a HIPAA covered entity or business associate accused of failing to grant an individual access to his/her PHI. As you know, in addition to 5 other rights specifically granted to individuals under HIPAA, except for a few specific types of data, covered entities and business associates must allow individuals to access and get a copy of their PHI, if it's in a designated record set. A few years ago, OCR started vigorously enforcing this, and it doesn't look like they're going to stop any time soon. This time, the fine is $35,000, in line with recent right-of-access settlements.

There are a few reasons why a covered entity won't give an individual access to their PHI, but many times it's not a good reason (the covered entity doesn't want to make it easy for a patient to find another provider). Take this as fair warning -- if the patient asks, give them the data, unless you have a VERY good reason.

Jeff [9:15 AM]

[ Wednesday, March 20, 2024 ]

Do Healthcare Organizations Cheap Out on Cybersecurity Spending? That's the question Modern Healthcare asks (subscription required). Based on a survey from last year, healthcare is one of the chintziest industries when it comes to spending on cybersecurity. It kinda shows, doesn't it?

Jeff [11:31 AM]

[ Thursday, March 14, 2024 ]

Ransomware Hits Healthcare Harder: If you've been living under a rock, you may not know this, but healthcare is the hardest-hit industry as far as ransomware and cybersecurity issues go.

Jeff [10:29 AM]

HHS steps in: HHS has started its own investigation into the Change hack; expect a record-setting fine. I'll predict at least $25 million, possibly over $100 million to break the 9-digit barrier.

Jeff [9:23 AM]

[ Monday, March 11, 2024 ]

Change Cyberattack: I guess everyone's finally going to learn what a "health care clearinghouse" is. They've always been the "other" entity that's a covered entity under HIPAA.

Jeff [10:10 AM]

[ Tuesday, March 05, 2024 ]

HHS Statement on Change Healthcare Cyberattack: In HIPAA-adjacent news, . . .

Unless you've been buried in a snowbank somewhere, you've probably heard that United Healthcare's technology/service/clearinghouse unit Change Healthcare suffered a cybersecurity incident that has severely affected its timely processing of data and claims. HHS has issued a statement, outlining that it is in contact with Change and has instructed MACs and other entities to try to assist those whose cash-flow has been adversely affected.

The key take-away from the entire Change fiasco is that the system is now so interconnected that an incident at a single point can nearly destroy the entire system. This is the proverb, "for want of a nail, the kingdom was lost," brought to life. It also comes on the heels of the pandemic, where we saw that efficiencies such as offshoring and just-in-time inventory may save money, but add a great risk that any type of problem could cause widespread disruption.

Jeff [1:16 PM]

[ Monday, February 26, 2024 ]

LaFourche Medical Group pays $480,000 to settle ransomware attack affecting 35,000 patients: An emergency and occupational medicine practice in Louisiana was a ransomware victim in 2021, the result of a successful email phishing attack. While it does not appear that the attack involved encryption, it did allow the hacker to access patient information, which gave the attacker the ability to seek a ransom payment for the return of the PHI.

Unsurprisingly, OCR cited lack of risk analysis and lack of sufficient policies and procedures as the basis of the fine.

Jeff [9:21 AM]

[Note: This should have been posted early January -- I just noticed it was still in Draft]

HHS announces data blocking penalties: The information blocking rule (IBR) is part of the 21st Century Cures Act, which itself is sort of a hodge-podge of a law addressing a bunch of different healthcare research and IT related matters. Of course, the Cures Act itself follows in a long line of healthcare policymaking that is both omnibus in presentation and reactive and/or deductive in focus.

Remember, HIPAA started out as a law intended to force insurance companies to provide coverage to an applicant who had similar insurance in the immediate months prior. One way to "scam" insurance is to not participate when you are healthy and only buy it when you are sick, which is the practical equivalent of not buying fire insurance until your house is on fire. If you can do so, you avoid paying into the insurance risk pool when you'd lose money, and only pay in when you'll get more back. In other words, you're "free-riding" on other insurance purchasers.

It's understandable that insurers want to prevent free-riders, and one way to do it is by refusing to cover pre-existing conditions. If you don't buy insurance until you're sick, and then show up at the insurer's door with an expensive illness, the insurer will say, "OK, you're covered, but not for what you already got." That's fair. However, what if you didn't game the system and weren't a free-rider: you had insurance previously, but you need new insurance because (e.g.) you got a new job? For the insurance company, it's still a pre-existing condition, but it's not fair to the insured. Ultimately, for a lot of people, the pre-existing condition hurdle meant they were stuck in their current job and couldn't take a better one. That's "job-lock."

HIPAA was originally drafted to target job-lock: if you had "creditable" health insurance coverage within the last 6 months, a new insurer can't deny you for a pre-existing condition. Remember, the first 2 letters of HIPAA don't stand for health information privacy, but for health insurance portability. It's a great idea that every politician could support. However, great ideas get other ideas attached to them, ideas that might not pass into law on their own, but would pass if they were attached to a great idea.

Several new foci got attached to HIPAA's portability provision, some with merit but none universally supported. First, regulators wanted the healthcare industry to be more efficient. At that time, healthcare was a laggard in adopting information technology; most healthcare providers used primarily paper records, and a large portion of billing was done on paper (and that done electronically was done using multiple systems with no coherent or consistent programming logic). The drafters of HIPAA thought that if all electronic transactions in healthcare were standardized, more people would bill and pay electronically, and the system would be more efficient. Thus, the transactions and code sets (T&CS) rule was adopted.

However, if all that data is going to be digitized and sent electronically, the data would be at much greater risk in electronic format than in paper format (you can't make money trying to steal paper records, and a breach of a physical paper storage room is a lot easier to catch and prevent). If we're going to encourage electronic data interchange in healthcare, we also need to ramp up data privacy and security practices. Thus, the Privacy and Security Rules were adopted.

You see, Portability begat T&CS standards, which in turn begat Privacy and Data Security standards. And you know that the HITECH Act contains a lot of HIPAA updates and revisions, including the data breach reporting standards.

One of the main foci of the HITECH Act (remember, the title is "Health Information Technology for Economic and Clinical Health") was the "meaningful use" rule: the encouragement/forcing of healthcare providers to adopt electronic medical records (EMRs); this was actually a follow-on to the genesis of HIPAA's transaction and code sets, as well as the data privacy and security requirements. While the T&CS rule was intended to entice the industry to become more digital, not enough providers moved in that direction, particularly small health providers. Many continued their paper ways. Congress knew that one way to get them to move would be to give them money to do so: if a healthcare provider uses electronic technology in a meaningful way (i.e., becomes a "meaningful user" of it, i.e., adopts an EMR), CMS will pay it money; if it does not, CMS will reduce what it pays for Medicare and Medicaid patients.

The IBR is intended to address an issue that has come up with regard to EMR companies intentionally designing their systems to be less-than-fully compatible with other EMRs.

Hospitals, medical groups push back against penalties

Jeff [9:12 AM]

Second OCR Ransomware Incident Settlement Announced: OCR has entered into a settlement agreement relating to a ransomware incident, this time a fine of $40,000 for Green Ridge Behavioral Health.

Lack of a Risk Analysis, lack of sufficient security measures, and a failure to monitor system activity were cited as reasons for the fine, which is a pretty common theme for OCR fines.

OCR's press release on the matter included specific actions it expects HIPAA covered entities to take to prevent incidents (and avoid fines if they do happen). These align with the recommended security practices that Section 405(d) of the Cybersecurity Act considers "mitigating factors" when regulatory action is taken:

"OCR recommends health care providers, health plans, clearinghouses, and business associates that are covered by HIPAA take the following best practices to mitigate or prevent cyber-threats:

- Reviewing all vendor and contractor relationships to ensure business associate agreements are in place as appropriate and address breach/security incident obligations.

- Integrating risk analysis and risk management into business processes; and ensuring that they are conducted regularly, especially when new technologies and business operations are planned.

- Ensuring audit controls are in place to record and examine information system activity.

- Implementing regular review of information system activity.

- Utilizing multi-factor authentication to ensure only authorized users are accessing protected health information.

- Encrypting protected health information to guard against unauthorized access.

- Incorporating lessons learned from previous incidents into the overall security management process.

- Providing training specific to organization and job responsibilities and on regular basis; and reinforcing workforce members’ critical role in protecting privacy and security. "

Jeff [8:46 AM]

[ Thursday, February 15, 2024 ]

Employers can be Blamed for Bad Employees: I don't know of any HIPAA covered entity or legitimate business associate that actively pursues bad data policies. Hospitals don't intentionally violate their patients' data privacy; physician offices don't operate with the goal of stealing their patients' data and selling it to hackers. And when hackers do gain access and steal data, they usually encrypt the data and hold it hostage, forcing the HIPAA covered entity or business associate to pay to get the data back. Thus, the hospitals and physicians and business associates are, in fact, victims of the bad guys.

Jeff [3:20 PM]

Hospital Cyberattacks Continue to Rise: This should come as a surprise to nobody, but the biggest data risk to pretty much everyone in the healthcare industry is the risk of cyberattacks, particularly ransomware. I have had several clients who have suffered ransomware attacks. These always disrupt care to some extent, and fortunately my clients have not suffered any patient care problems, but others have. However, they all have had to spend extremely large sums to fix the problems, and many have suffered the follow-on effect of the class action lawsuit by patients whose data was involved.

If you aren't focusing on this now, you need to.

Jeff [12:58 PM]

[ Wednesday, January 10, 2024 ]

OCR Lies. I usually have good things to say about OCR. For the most part, it's full of good people trying to do good things, and the investigators are probably the nicest enforcement people in the entire government: they really want to help healthcare providers get better and often give the benefit of the doubt to healthcare workers who are really trying to do the right thing but don't always get it right.

But whenever the Xavier Becerra hack-machine gets involved, you can count on things going off the rails, and yesterday brought a sterling example.

It is an indisputable fact that good people can disagree on abortion, but it's equally indisputable that people who believe abortion is murder should not be forced to participate in performing abortions. But it happens, or at least it did prior to a 2019 rule from HHS threatening hospitals with removal of federal funding for forcing objecting employees to participate in abortions or other acts that violate their legitimate religious beliefs.

Yesterday, HHS, through OCR, rescinded that rule. I guess you could quibble that the rule might have given employees too much leeway to refuse to do legitimate work that shouldn't be objectionable, or that full removal of federal funding was too big a penalty, and a $100,000 or $1 million fine would do the trick. But rescinding it entirely?

And even worse, bragging that REMOVING those conscience protections is actually INCREASING them? Here's the header of OCR's press release:

And here's the headline and lede of an article in The Hill, which is certainly left-leaning:

Jeff [9:26 AM]

[ Monday, January 08, 2024 ]

New Jersey medical practice Optum Medical Care has settled an OCR investigation regarding Optum's failure to grant patient access to medical records, agreeing to pay $160,000.

Jeff [12:20 AM]

[ Thursday, December 28, 2023 ]

ESO, CVC, HEC Disclose Data Breach: ESO, a healthcare software company serving hospitals, EMS entities, and governmental agencies, announced a ransomware-triggered data breach affecting 2.7 million individuals.

Cardiovascular Consultants of Arizona also announced that it suffered a cyberattack that affected almost half a million patients.

Finally, New Jersey-based population health management company HealthEC also announced a cybersecurity incident affecting 112,000 individuals.

All of these are offering credit monitoring to affected individuals.

Jeff [8:42 AM]

[ Tuesday, December 12, 2023 ]

Norton Healthcare Hack Exposes Data of 2.5 Million Patients. The hackers accessed some of the Louisville hospital system's data storage, but not the EMR or MyChart.

Jeff [7:52 AM]

[ Monday, December 11, 2023 ]

US health officials call for surge in funding and support for hospitals in wake of cyberattacks that diverted ambulances. Of course, some of the "funding and support" is imposing stricter fines for providers who have lax cybersecurity.

Some amount of cybercrime is inevitable. However, there still is a shocking lack of cybersecurity among healthcare providers. Patching (regularly applying software patches when they are issued by the software providers), good data backups, network segmentation (keeping secure parts of your network -- which don't need internet connections -- separated from the parts of the network that do need internet connections), and phishing training can eliminate the vast majority of cybersecurity incidents. If you're not doing that, you probably deserve stricter fines.

Jeff [10:04 AM]

[ Tuesday, November 21, 2023 ]

St. Joseph's Medical Center Settlement: During the height of Covid, St. Joseph Medical Center allowed a reporter and photojournalist access to its operations as part of a story about hospital overcrowding and St. Joseph's response to swelling numbers of Covid patients. Some pictures of patients apparently made it into the newspaper, and according to OCR, some information about St. Joseph's patients. OCR has now entered into a settlement agreement with St. Joseph's regarding the incident.

St. Joseph has admitted no liability in making the settlement.

The settlement involves an $80,000 fine, a review and possible revision of St. Joseph's HIPAA policies (to be reviewed by OCR), and a 2-year oversight plan by OCR. That's not a big penalty; I'd be extremely surprised if St. Joseph spent less than $80,000 on attorney's fees in conducting its own investigation and response, much less what it might've spent on other consultants to address the investigation. All HIPAA covered entities should be reviewing their policies and procedures regularly, and most would love to have OCR review them and give their blessing or offer tips for useful revisions. The 2-year monitoring could be a bit of a pain, but it's shorter than the usual 3-year plan seen in most settlements.

At this point, I have not seen a response from St. Joseph's, nor have I seen copies of the AP story that made the press, but I suspect that there is a legitimate question about whether PHI was actually disclosed in the article. I suspect the photos do not show patient faces, and any individual information was nearly if not entirely de-identified. However, it is entirely possible that the reporter was exposed to at least a minimal amount of PHI when he/she was allowed access to non-public areas where patients were gathered, and likely that the hospital didn't get consent from all of those patients before allowing the access. Still, that's pretty thin gruel.

However, the case is another reminder of the risks a health care entity takes when dealing with the press. While St. Joseph's probably saw the reporter's request for access and information as an opportunity to tell their story and put on a good face, covered entities must be extremely careful about what information gets out.

Jeff [8:51 AM]

[ Wednesday, November 15, 2023 ]

Perry Johnson & Associates, a medical transcription service, has apparently suffered a data breach involving a hacker gaining access to its computer systems. Not much is known at this point, but I'll update you as more information comes in.

Jeff [8:24 AM]

[ Friday, November 03, 2023 ]

AHA sues HHS to stop OCR guidance on web trackers. This is super-inside-baseball HIPAA stuff, folks. And it has a chance of taking hold.

Here's the background: many websites use some type of technology to track user behavior on the website. There are tons of legitimate reasons why you would want to do this: If every visitor to one part of your website clicks the same link, or otherwise acts in a non-random way, you want to know it. For example, let's say you offer weight loss services and have a page with many different choices (exercise programs, diet counseling, Ozempic, psychedelics, etc.), and you have an equal number of staffers working to provide each choice. But you find out from tracking technology that 90% of your visitors all go to the Ozempic page, but nobody ever clicks on exercise. If you're running your business responsibly, you'll switch the exercise employees over to the Ozempic team. But you might never know that website visitors are behaving that way without a tracker.

One of the ways trackers work is by tying the visitor's choices to the particular visitor, usually by the specific signature of the user's computer or other device that connected to the website (for example, the user's cell phone or iPad). The company that provides the tracking technology uses the information it gathers to fine-tune its algorithms for its healthcare provider customer, but it also uses the information for other purposes, such as the marketing services it sells to other customers.

Here's the problem: the device ID isn't necessarily the person who owns it (multiple people could have access to and use the same iPad), and the behavior of the person doesn't necessarily tell you anything specific about the person (I could be looking at information about a particular disease not because I have it, but because I know someone who does and I'm curious). However, it's still a pretty good proxy. If I go to a weight-loss website, I'm probably looking to lose weight; if I go to a diabetes website, the odds are pretty good that I'm a diabetic. And if my computer goes to the website, it's probably because it's me that's operating it. Thus, you can deduce, not with certainty but with some high level of likelihood, that if my cell phone accesses a website for X disease, I have that disease. HOWEVER, is data that's simply indicative of health status PHI? How tight does the connection need to be?

And therein lies the problem -- the information derived from the tracking technology COULD be PHI, and letting the technology company have access to that information would make the vendor a business associate. The vendors don't want to be restricted in how they use that data.

OCR has declared (in a December 2022 bulletin) that providers that use tracking technology must have BAAs with those vendors, but those vendors won't sign BAAs. The end result is that big hospital systems are prevented from using a technology that can streamline their processes, save them money, and allow them to better serve their patients. Hence the AHA's actions.

This will be interesting.

(11/3/23)

UPDATE 11/9/23: Interesting press release from AHA and other hospital associations relating to its suit against HHS relating to web trackers. According to Bloomberg Law (subscription may be required), HHS uses the same tracking technology on its websites that HHS guidance warns hospitals about as being potentially violative of HIPAA. Interestingly, I also learned in that article that hundreds of class-action lawsuits have already been filed against hospitals for using the technology in violation of HIPAA.

This isn't the end of the story, of course: HHS isn't a HIPAA-covered entity (although Medicare and Medicaid are), and people searching the HHS website usually aren't looking for specific medical conditions or providing the same type of information as a visitor to a hospital site might. However, from a general privacy standpoint, it's an interesting point of hypocrisy.

Jeff [9:40 AM]

[ Wednesday, November 01, 2023 ]

OCR Fines Ransomware Victim due to HIPAA breaches: Doctors' Management Services (DMS), a management company that serves as a business associate of covered entity physician practices, has been fined $100,000 by OCR for failure to do a sufficient Security Risk Analysis (SRA), lack of policies and procedures, and failure to monitor system activity (all the usual suspects).

DMS was itself a victim: a criminal hacker caused the incident. But DMS still got hit with a big fine because they didn't take the steps needed to avoid being a victim in the first place.

Some covered entities that are ransomware victims get fined, and others don't. Both groups suffer from the incident, but the second group (ones with good SRAs, policies and procedures, and monitoring) is much less likely to get fined. Just ask me -- I have personal experience with this!

UPDATE: Thanks to Theresa Defino at Report on Patient Privacy, DMS has had a chance to tell their side of the story. As I noted in my original post, DMS was a victim here. I noted that "they didn't take the steps," based on OCR's press release. Now, I'm thinking maybe OCR overreacted, but I haven't actually talked to DMS.

The point here, though, is that OCR's stated list of wrongdoing is the same list that's applicable to almost every other case involving a fine (other than the access cases). You want to be able to prove that you have done your SRA, have good policies that you follow, and monitor your system activity.

Jeff [4:03 PM]

HHS proposed penalties for information blocking: In addition to stated penalties that can be up to $1,000,000, HHS is proposing that health systems engaging in information blocking (prohibited by the 21st Century Cures Act) be additionally punished by losing "meaningful use" funds, MIPS payments, and the ability to share in MSSP payments pursuant to an ACO.

Jeff [3:43 PM]

Did you know HHS has a YouTube channel? Here's a recent posting explaining how your HIPAA Security Rule compliance activities will also help you avoid a cyberattack.

Obviously, if you've read anything on this site, you know that failure to do a Security Risk Analysis (which is specifically required by the Security Rule) is the number one thing that OCR cites when issuing fines. This makes sense, because (i) it's the number one thing that will help prevent you suffering a breach or other incident, (ii) a breach/incident is usually the thing that leads to an OCR investigation, and (iii) an investigation that shows failure to do a SRA will often end up with a fine and a compliance agreement.

Just as importantly, a cyberattack can ruin your business, and it's never good for your patients. Best to take the appropriate steps to avoid them.

Jeff [3:35 PM]

[ Sunday, October 29, 2023 ]

Cybersecurity Toolkit for Healthcare: HHS and the Cybersecurity and Infrastructure Security Agency (CISA) have joined forces to publish a toolkit to help the healthcare industry work with governmental agencies to "close gaps in resources and cyber capabilities." The toolkit is here; I haven't reviewed it, but it promises to "contain remedies for health care organizations of all sizes."

Jeff [4:04 PM]

Spooky: OCR is hosting a Halloween webinar on the HIPAA Security Rule's risk analysis requirement. At 3:00 Eastern time (the invite says EST, but I think it's EDT) on Tuesday, October 31, an OCR panel will discuss how to conduct a risk analysis. Trust me, you want to be doing what OCR thinks you should be doing; it makes it so much less painful to explain how the breach you suffered wasn't your fault. And there's no better way to find out what OCR thinks you should be doing than listening to them explain what you should be doing.

You can register for the webinar here: Webinar Registration - Zoom

Jeff [3:10 PM]

Ransomware: the Biggest Threat. According to research by NCC Group, ransomware attacks were up dramatically in September 2023, both from the preceding year (153%) and, within the healthcare sector, from the preceding month (89%). It's relatively easy to do, and many victims have no option but to pay.

Patching, MFA, and training can prevent ransomware attacks, and good backups can make the ones that get through a lot less painful. Those are all easy things to do. . . .

Jeff [3:02 PM]

[ Tuesday, September 12, 2023 ]

LA Care Breach and Incident net $1.3M fine: Yesterday, HHS announced a settlement with LA Care, the public health plan run by Los Angeles County, relating to two prior incidents: A 2019 data breach involving 1500 patients whose membership cards were sent to the wrong member, and a 2013 incident involving about 500 people whose information was loaded onto a different patient's page on LA Care's online patient portal.

Jeff [9:16 AM]

[ Monday, September 04, 2023 ]

iHealth/Advantum settles HIPAA FTP server breach for $75,000. I was going through some old emails and came across a HIPAA settlement that I don't think I mentioned earlier. And it's not an access settlement. It involves a business associate and an unsecured storage server (likely an FTP server). Interestingly, the breach was not a "wall of shame" breach.

Jeff [12:51 PM]

[ Friday, August 25, 2023 ]

UHC Takes a Hit for Denying Access to PHI: The ongoing effort of OCR to bring actions against HIPAA covered entities has tallied its 45th fine, this time with insurer United Healthcare paying the price. As with the other 44 instances, the fine is small by comparison to other non-access related HIPAA enforcement actions: $80,000, which roughly equates to UHC's revenue every 8 seconds. The complainant in this case actually filed 3 separate complaints against UHC, so it's likely there was at least a little fire behind that smoke.
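For anyone who wants to check that "every 8 seconds" comparison, here is a back-of-the-envelope sketch in Python. The annual revenue figure is an assumption (roughly $324 billion, about what UnitedHealth Group reported for 2022), and the function name is purely illustrative; swap in whatever revenue number you prefer.

```python
SECONDS_PER_YEAR = 365 * 24 * 60 * 60

# Assumption: approximate annual revenue in dollars. This figure is not
# taken from the settlement; it is only used for a rough comparison.
ASSUMED_ANNUAL_REVENUE = 324_000_000_000


def seconds_of_revenue(fine, annual_revenue=ASSUMED_ANNUAL_REVENUE):
    """Return how many seconds of revenue a given fine represents."""
    revenue_per_second = annual_revenue / SECONDS_PER_YEAR
    return fine / revenue_per_second


if __name__ == "__main__":
    print(f"{seconds_of_revenue(80_000):.1f} seconds of revenue")
```

Under that assumed revenue figure, the result lands a little under 8 seconds, which is in line with the rough estimate above.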

The key takeaway: OCR is going to keep going after covered entities who don't give access to individuals who request their records. Access is a right, so give it.

Jeff [6:39 AM]

[ Monday, August 14, 2023 ]

No surprise: Data breaches in the healthcare sector are the most expensive.

Jeff [8:40 AM]

[ Tuesday, July 25, 2023 ]

Average Cost of a Healthcare Data Breach Continues to Rise: The average cost of a healthcare data breach is now $11 million, according to IBM and the Ponemon Institute. This is up $1 million since last year. Healthcare data breaches are also about 2.5 times as costly as in other industries.

Jeff [8:44 AM]

[ Tuesday, July 18, 2023 ]

HC3 issues brief on cyber risks of AI and ML: HHS' Health Sector Cybersecurity Coordination Center (HC3) has issued a brief outlining the cybersecurity risks of artificial intelligence and machine learning. If you don't know much about AI and ML, that's fine; most of the brief is background information, explaining how AI and machine learning work.

Jeff [8:02 AM]

[ Friday, June 16, 2023 ]

Snooping Results in Quarter Million Dollar Fine: Breach threats can be external (hackers and stolen data) or internal (lazy or ill-intentioned employees who lose or steal data). One of the more common types of insider breach incidents is snooping -- staff look at medical records they shouldn't, often records of a friend, family member, or celebrity, and usually out of curiosity. I often advise clients to be on the lookout for snooping, by flagging celebrity files so that any access is immediately reviewed and training medical records staff to pay particular attention to the records of known family members of staff. Warnings against snooping should be a regular part of HIPAA training, if the facility is such that family members of staff or celebrities are likely to be patients. And when snooping is detected, punishment should be relatively harsh, pour encourager les autres.

Yesterday, OCR announced it had levied a $240,000 fine against Yakima Valley Memorial Hospital for a snooping violation. According to OCR's report, 23 members of the hospital's ER security staff accessed records of 419 individuals when they had no legitimate reason to do so.

The case is interesting in that at 419 affected individuals, it's likely that the incident was reported as part of Yakima Valley's annual reporting, and not reported when it occurred. It is unusual for OCR to issue fines for breaches this small, particularly with regard to a type of incident that is so common.
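For the more technically inclined, the "flag the file so any access gets reviewed" advice above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the `AccessEvent` record, the `flag_for_review` helper, the user names, and the patient IDs are all hypothetical, and a real EHR audit log will look different.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AccessEvent:
    user_id: str      # staff member who opened the chart (hypothetical field)
    patient_id: str   # record that was opened (hypothetical field)


def flag_for_review(events, watchlist):
    """Return every access event that touches a watch-listed record."""
    return [e for e in events if e.patient_id in watchlist]


# Hypothetical watch list: celebrity files plus records of staff family members.
watchlist = {"P-1001", "P-2002"}

# Hypothetical audit-log entries.
events = [
    AccessEvent("nurse_a", "P-0001"),
    AccessEvent("guard_b", "P-1001"),
    AccessEvent("clerk_c", "P-2002"),
]

flagged = flag_for_review(events, watchlist)
for e in flagged:
    print(f"review: {e.user_id} opened {e.patient_id}")
```

Note that a flag only means "a human should look at this," not "this was a violation"; the treating nurse will show up on the list too, and that's fine.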

Jeff [12:48 PM]

[ Wednesday, June 07, 2023 ]

HHS fines NJ psychiatric provider for disclosing PHI when responding to a negative review. Manasa Health Center was fined $30,000 and required to enter into a corrective action plan with new training of employees and revised policies and procedures. Apparently, a patient complained about the practice in an online forum, and the psychiatric practice responded and defended itself, but in doing so, it exposed PHI of the patient.

I've previously posted on this subject, and on similar issues with covered entities inadvertently disclosing PHI while trying to defend themselves (some of the links have died from link rot, but you get the idea). You don't have to sit silently while a patient posts an unfair or false bad review; however, your response cannot include the patient's PHI (simply confirming that the patient is in fact your patient is PHI). There's no "he said it first" exception, nor does the fact that the PHI has already been made public mean that the provider can disclose it again.

For example, if a patient states that he had an 8:00am appointment in November but wasn't seen by the doctor until 2:00pm, you could respond with a statement such as, "While we can neither confirm nor deny whether this individual is a patient, we time stamp all patient sign-ins and the start of all patient-provider encounters. We have reviewed all patient encounters during November and have not found any instance where the length of time between a patient's sign-in and the start of his/her physician visit was longer than 45 minutes." That response refutes the patient's claim without disclosing PHI.

Jeff [12:41 PM]

[ Friday, April 14, 2023 ]

End of the Public Health Emergency means end of HIPAA Enforcement Discretion. Been on Zoom or Teams lately? Worked from home? Telecommuted? The Covid Pandemic changed a lot of things about the way we work, including a dramatic increase in telehealth services. In healthcare (primary care particularly), there was firehose-level adoption of Zoom, FaceTime, and similar technology at the very early stages of the pandemic, as providers tried to find ways to keep their patients healthy without having them come to the office.

However, these new technologies raised potential HIPAA issues: were they safe enough? Had the adopting practices done sufficient due diligence to understand the risks they posed to the confidentiality, integrity, and availability of the PHI that would be transmitted? OCR wasn't about to simply say "Zoom is HIPAA-compliant" (as any reader of this blog knows, that's not how that works); however, at the same time, OCR wasn't about to stand in the way of Covid-safe healthcare delivery.

As is usually the case*, OCR took a balanced, reasonable approach: if providers agreed to take reasonable steps to layer on the best privacy and security safeguards they could, OCR would agree not to prosecute them for a HIPAA violation for using one of these video technologies. They called it "enforcement discretion": OCR would exercise the discretion granted to it to not prosecute Zoom and FaceTime users for HIPAA violations. Now, OCR didn't say Zoom or FaceTime were otherwise improper under HIPAA; keeping a neutral stance, they simply said that, for the time being, they wouldn't hold it against a covered entity that it chose to use such a technology.

OCR made clear that this was a pandemic-related decision, and subject to the circumstances. That meant that, when the pandemic ends, so does the enforcement discretion. And lo, it came to pass, that the pandemic will officially end (as far as the federal government is concerned) on May 11, when the Public Health Emergency declaration ends. OCR will give covered entities and business associates 90 additional days (from May 12 through August 9) to become compliant. OCR's declaration is here.
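If you want to check the calendar math on that 90-day window, here's a quick Python sketch. It assumes the year is 2023 (the year of this post) and that the window is counted in calendar days with both endpoints included; the variable names are just for illustration.

```python
from datetime import date, timedelta

# Assumption: the Public Health Emergency ends May 11, 2023.
phe_end = date(2023, 5, 11)

# The transition window starts the next day and runs 90 calendar days,
# counting both the first and the last day.
transition_start = phe_end + timedelta(days=1)
transition_end = transition_start + timedelta(days=89)

window_days = (transition_end - transition_start).days + 1

print(transition_start.isoformat())  # 2023-05-12
print(transition_end.isoformat())    # 2023-08-09
print(window_days)                   # 90
```

So May 12 through August 9 is in fact 90 days when you count both ends.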

Bottom line: if you are a covered entity and adopted Zoom or some other telehealth technology, now is a good time to take a look at how you're using it, and make sure it fits within your HIPAA policies and procedures. It would be a good idea to have a specific policy/procedure to address use of telehealth technologies (ask me if you need a form). Make sure you cover ALL instances where you use Zoom, especially if you use it for non-patient-care purposes -- for example, staff meetings where PHI is discussed.

It might also be a good idea to leverage off of that review to freshen up your overall HIPAA risk analysis. Are there other practices you adopted during the pandemic that might have HIPAA risk? It doesn't mean you have to actually change anything, and in fact you might be doing everything as safely as possible. But it's a good idea to look, because technology changes, as do threats.

* I've been accused of being a cheerleader or fanboy for OCR, but that's not true. I think the civil rights arm of OCR has been pretty lousy, with a heavy thumb on the scale for leftist woke claptrap and a clear bias against traditional religious rights. But the HIPAA enforcement part of OCR has really been a partner to the healthcare industry it regulates from the beginning of HIPAA in 1999-2000. I give credit where due, and when the government does something right, it deserves mention.

Jeff [8:39 AM]

Did you know that I have a blog? Good, because it seems I keep forgetting.

There's been a lot going on in HIPAA (comparatively) in the last few weeks, but I've not been very good at posting about it. I'll try to be better.

Jeff [8:08 AM]

[ Monday, March 13, 2023 ]

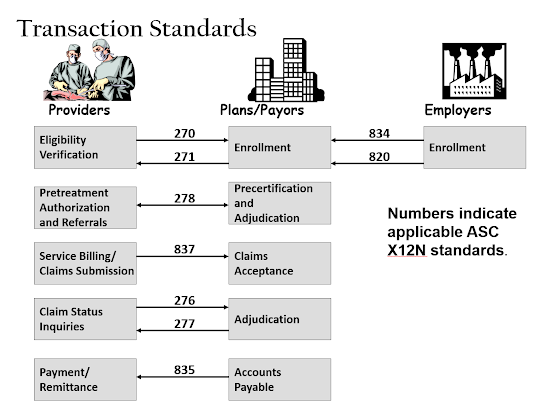

New HIPAA Standards for attachments and electronic signatures: I saw this in December and tagged it for blogging, but lost it in my overcrowded email folders. This is one of those super-insider-HIPAA deals, but CMS is proposing a new HIPAA standard transaction for attachments and e-signatures. As you may know (or not), one of the original purposes of HIPAA was to standardize (and thus simplify) many of the mundane daily transactions that providers and payors engage in when providing and paying for medical care. Originally, 9 separate transaction standards were adopted:

The new proposal relates to several of these, particularly 276-278 and 837. Attachments have always been a non-standard add-on to standard transactions, which in many ways defeats the purpose of the standardization. The new rule won't completely fix the non-standardized attachments problem, but will streamline a portion of the process. It'll take a while for providers and payors to get the new processes rolled out, but once they're done, it should result in some cost savings.

CMS' fact sheet is here, and the Federal Register posting is here.

Jeff [9:12 AM]

[ Thursday, March 09, 2023 ]

Anniversary: I meant to post something yesterday, but got a little distracted. As of yesterday, this blog is now old enough to drink in all 50 states. Yes, I started this blog on March 8, 2002. Hard to imagine.

Jeff [11:24 AM]

[ Tuesday, February 28, 2023 ]

HHS issues advisory on "Clop" strain of ransomware. Spring is in the air, and as regularly as the seasons changing, there's a new varietal of ransomware. This one is from a Russian group calling itself "Clop," which exploits a flaw in GoAnywhere. HHS and HSCCC have issued an alert. One thing that sets Clop apart is that it specifically targets the healthcare sector.

The usual defensive tactics apply: train your staff to avoid phishing, patch your software, manage your fenceline, back up your data, have your system mapped, use DLP, and put alarms on traffic flows.

Jeff [10:37 AM]

HHS is committed to reducing the backlog of HIPAA investigations. Complaints to OCR are now over 50,000 a year (2/3 of which are HIPAA-related), and OCR just isn't designed to meet that level of demand. So HHS is going to reorganize OCR, with specific divisions addressing policy, strategic planning, and enforcement. I don't think that's particularly useful.

Seems to me like OCR should be split into civil rights (discrimination and the like) and HIPAA/health data privacy and security. The HIPAA side should also be split, with policy, planning, and guidance on one side, and breach/complaint on another branch, and enforcement as a third division. The breach/complaint side should also be split into breach issues and non-breach issues (such as access).

At least that's how I'd do it.

Jeff [10:26 AM]

[ Wednesday, February 22, 2023 ]

The Lesson of Good Rx: Don't forget the FTC. Obviously, I tend to focus on HIPAA here, as do many HIPAA-covered entities (and HIPAA-adjacent but non-CE industry players). But the FTC's recent settlement with Good Rx over patient data handling practices should be a lesson. Good Rx used tracking pixels to glean data from patients, and allowed Meta and Google to access the data; that resulted in Good Rx users getting targeted ads based on the information they had given Good Rx (which Good Rx had stated in its privacy policies would be kept confidential).

According to recent guidance from HHS, the use of tracking pixels can result in a HIPAA violation, if (i) the pixel use results in disclosure of PHI and (ii) the recipient isn't a rightful recipient or there's no BAA in place. Tracking pixels are ubiquitous on webpages everywhere, since they are useful to the webpage owner to know what's working on their webpage and what isn't. And there's normally no problem with the webpage owner having that data; the problem is if the webpage owner shares that data with others, without warning the customer first.

Bad pixel use could easily result in a HIPAA enforcement action. But even if HIPAA isn't applicable, there's always the FTC.

Jeff [9:27 AM]

[ Friday, February 03, 2023 ]

Banner Health Settlement: It appears that Banner Health has reached a settlement with OCR over its 2016 hacking incident that potentially exposed PHI of almost 3 million people.

Jeff [3:25 PM]

[ Thursday, December 15, 2022 ]

New Vision Dental HIPAA violation: Thanks to Jamie Sorley for tipping me off to this at the Dallas Bar Association Health Law Section's holiday party last night (sponsored by Bradley -- thanks for the excellent tequila!). HHS has announced a settlement with a dental practice that doesn't involve access. The practice disclosed PHI on social media when responding to patient complaints and bad reviews. The good news for the practice: the fine was only $23,000.

It's tough when a patient posts a false negative review. But a provider has to be very careful that any response does not involve any disclosure of PHI. The safest route is to ignore it, but if you must respond, do so with global statements, not anything that could specify any particular patient. For example, if the patient says he/she had to wait 3 hours in the waiting room on the day before Thanksgiving, the practice could respond and say it reviewed all of its sign-in sheets and the time-stamp of every patient encounter during the month of November and the longest any patient waited between sign-in and being taken to an exam room was 45 minutes. That response does not disclose any patient's PHI. On the other hand, saying "Mrs. Jones says she waited 3 hours, but she signed the sign-in sheet at 1:30 and was in the chair at 2:15" would be an improper disclosure of PHI.

Jeff [2:45 PM]

Healthcare Industry Cybersecurity Advice: Last month, Sen. Mark Warner issued a white paper, "Cybersecurity is Patient Safety," on the current state of healthcare cybersecurity and ways to improve it. This month, the American Hospital Association has responded with a letter to Sen. Warner, providing a section-by-section response.

Ransomware, data breaches, and other cybersecurity issues are a huge problem in the healthcare industry. While care-denying ransomware attacks are relatively rare, healthcare is a critical data-driven industry that suffers much more than others when hit with an attack. A strong governmental and industry focus on cybersecurity is welcome.

But much of the advice relates to ways the government can spend more money, which is a premise that it's wise to question. The money wasted on the Covid response (not just the huge amounts of fraud, but the crippling effects of long-term unemployment insurance and deficit-ballooning cash grants to just about every business and government entity in sight -- many of which are now being spent on wasteful and unnecessary "infrastructure" and other pet projects that have only the most tangential connection to healthcare, much less the coronavirus) has put a huge weight on the US economy that it will take at least a generation to overcome. Virtually all our current economic woes (inflation, supply chain disruptions, business failures, historically low labor participation rates) are directly attributable not to Covid, but to the Covid response.

What we need is more clear and specific guidance from OCR, ONC, and HHS generally on what to do. The 405(d) program is great, but should be more specifically tied to what constitutes "reasonable safeguards" under the Security Rule. OCR need not abandon the flexibility granted in 45 CFR 164.306(b), but could provide a "safe harbor" reference to a concise and current list of specific security practices. Subpart C of 45 CFR Part 164 (the core Security Rule provisions, 164.302 et seq.), is clear and concise, and a fraction of the size of Subpart E (the Privacy Rule provisions), but finding your way to the specific technical guidance in the 405(d) program (or wading through the dozens of overly-wordy HHS data security resources) can take a lifetime.

Most of us who practice regularly in healthcare cybersecurity are aware of the 405(d) program and the technical guidance for small, medium, and large healthcare organizations, but very few providers are aware of it. Turning the technical volume attachments into a safe harbor would go a long way toward alleviating some of the health industry's ransomware exposure and risk.

Jeff [9:17 AM]

[ Wednesday, December 07, 2022 ]

Business Associates Get Hacked, are Threat Vectors: Half of the most recent 10 big HIPAA breaches involved business associates. As a covered entity, your first task is to make sure you have BAAs in place with your vendors. But the second task is to make sure your business associates aren't risky. They may be your weakest link, and your patients won't be happy with your excuse that "it wasn't my fault, it was the guys I hired and gave your data to."

Jeff [1:25 PM]

[ Monday, December 05, 2022 ]

Tracking Technologies: HHS has issued guidance to HIPAA covered entities and business associates regarding the risks of using tracking technologies to understand patient activities and behaviors, including through pixel use (such as with Facebook Pixel/Meta Pixel advertising tools). I haven't fully digested the guidance but will update this post when I do.

Jeff [8:52 AM]

[ Wednesday, November 30, 2022 ]

Part 2: OCR announced earlier this week that they want to revise "Part 2" to more closely align with HIPAA. For those who don't know, 42 CFR Part 2 is a federal regulation that prohibits the disclosure of information regarding patients at federally-supported substance abuse treatment facilities. It's a remnant of an era when the government was concerned that drug addicts would not seek treatment due to fear that their presence at a drug treatment facility would be used as proof of drug crimes. Part 2 serves as sort of a super-HIPAA: with few exceptions, no data can be released about a patient without the patient's consent.

I haven't yet read what OCR's proposing, but I'll let you know what I think when I do.

Jeff [6:15 PM]

[ Tuesday, November 08, 2022 ]

8 Common-Sense Ideas for Defending Against Cyberattacks: This is focused on hospitals, which are seeing a rash of attacks, but these steps will work for every organization.

Jeff [8:12 AM]

[ Thursday, October 27, 2022 ]

Data minimization and the Drizly case. News hit earlier this week when a proposed settlement between the Federal Trade Commission (FTC) and the Uber alcohol-delivery subsidiary Drizly was disclosed. The consent order is remarkable on its face because it applies to the CEO of the company both while at Drizly and at any other company where he takes a management role. While that is very unusual, of greater importance is probably the focus of the FTC on data minimization.

Drizly suffered a data breach when a hacker got the credentials of an employee and was able to log on and access a lot of information about Drizly's customers. And Drizly collected a lot of customer and employee information -- much more information than Drizly needed to deliver drinks to thirsty customers. The proposed consent order will require Drizly to limit the data it collects and keeps and requires James Cory Rellas to implement similar restrictions at any future employer.

The FTC's goal is data minimization. Often companies will collect more information than they need to do the job at hand, because it might otherwise be valuable at some point in the future for basic or new purposes. This is particularly true at initial customer sign-in, or with start-up companies, since they don't know what data they might find useful in the future, and they might not be able to collect it later.

However, while that data may or may not be valuable to the company, there's a truism in data privacy that pushes in the opposite direction: you cannot lose what you don't have. A data breach can only get the data that is in the database, so the less data you retain, the less you have to protect.

Expect to see not only the FTC, but other privacy enforcement agencies focus more often on data minimization as a breach mitigation strategy.
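For anyone who wants to see what "you cannot lose what you don't have" might look like in practice, here is a minimal Python sketch. It is not taken from the Drizly consent order: the field names, the `REQUIRED_FIELDS` allow-list, and the `minimize` helper are all hypothetical, and deciding what actually counts as "needed" is a business and legal call, not a coding one.

```python
# Hypothetical allow-list: the only fields the business has decided
# it actually needs in order to fulfill an order.
REQUIRED_FIELDS = {"name", "delivery_address", "date_of_birth"}


def minimize(record, allowed=REQUIRED_FIELDS):
    """Return a copy of the record containing only allow-listed fields."""
    return {key: value for key, value in record.items() if key in allowed}


# Hypothetical sign-up form submission that asks for more than is needed.
signup = {
    "name": "Pat Example",
    "delivery_address": "123 Main St",
    "date_of_birth": "1990-01-01",
    "device_id": "abc-123",
    "contacts_list": ["friend one", "friend two"],
}

stored = minimize(signup)
print(sorted(stored))  # ['date_of_birth', 'delivery_address', 'name']
```

The point of filtering before anything is stored is that the extra fields never reach the database, so a later breach can't expose them.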

Jeff [2:01 PM]